Today we're joined by Dr. Rob Johnston. He's an anthropologist, an intelligence community veteran, and author of the cult classic Analytic Culture in the US Intelligence Community, a book so influential that it's required reading at DARPA. But first and foremost, Johnston is an ethnographer. His focus in that book is on how analysts actually produce intelligence analysis.

Johnston answers a lot of questions I've had for a while about intelligence:

Why do we seem to get big predictions wrong so consistently?

Why can't the CIA find analysts who speak the language of the country they're analyzing?

Why do we prioritize expensive satellites over human intelligence?

We also discuss a meta-question I often return to on Statecraft: Is being good at this stuff an art or a science? By “this stuff,” in this case I’m referring to intelligence analysis, but I think that the question generalizes across policymaking: would more formalizing and systematizing make our spies, diplomats, and EPA bureaucrats better? Or would it lead to more bureaucracy, more paper, and worse outcomes? How do you build processes in the government that actually make you better at your job?

Thanks to Harry Fletcher-Wood for his judicious edits.


I want to start with a very simple question: What's wrong with American intelligence analysis today?

That's an interesting question. I'm not sure that there's as much wrong as there once was, and I think what is wrong might be wrong in new and interesting ways.

The biggest problem is communicating with policymakers. Policymakers have remarkably short attention spans. They're conditioned by a couple of things that the intelligence community can't control. They want to know, "Is X, Y, or Z going to blow up or not?" That's fine. However, if you say, "Yes, probably in 10 years," there's nothing a US policymaker can do about it. "Oh God, 10 years from now, I can't think about 10 years from now. I've got to worry about my next election."

If you say, "Oh, by the way, you've got 24 hours," they think, "Oh God, I can't do anything about it. It's too late. I don't have a lever to pull to effect change in 24 hours."

So there's always this timing-teaming problem. If I give a policymaker 2–3 weeks, that's optimal space for the policymaker. But a lot of the consumers of intelligence aren't savvy enough consumers to know that they should ask for that: "In the next three weeks, lay out the three different trends that might occur in Country X, and tell me what the signposts are for each of those so that I can make some adjustments based on ground truth. So, if we see X occur, it indicates that there's a greater probability that Y will occur versus A or B."

The questions from policymakers range from "Whither China?" which is so broad as to be almost meaningless, all the way down to "Here's this weapon platform. Do we know if this weapon platform is at this location?" The "Whither China?" questions are always driven by poor tasking.

That communication between the consumer and the producer needs a lot of focus and a lot of work. In my experience, it's always an intelligence failure and a policy success — it's never a policy failure. The first to get thrown under the bus is the intelligence community.

Could you define tasking for me? As I understand it, I'm the consumer of some product from the intelligence community, and I “task” you with providing it, right?

Generally speaking, yeah. Our consumers are policymakers, either civilian or military, and they're the ones doing the tasking.

And “teaming” is how people get assigned to tasks?

There are a couple of different ways it works. I can't speak to the current administration, but in the past, there’s been the “President's Intelligence Priorities.” Every president has a list, "These are the 10 things I really care about." The community puts together the National Intelligence Priorities Framework, and it says, "Okay, community, in all of the world of threat and risk, what do we really care about?" They lay that out. There are some hard targets: Russia, China, North Korea, Iran, the usual suspects. “All right, let's merge those two lists, and then we will resource collection and analysis based on them.” It's a fairly rational, albeit slow, process for arriving at some agreed-upon destination over the next year, two years, four years, whatever it happens to be.

So the product of that workflow is a set of decisions, “We're going to staff this question with this many people, and this question with fewer people.”

That's exactly right. And that leaves certain things at risk. A good example of that is the Arab Spring. If you think about a protester self-immolating in Tunisia, the number of analysts really focused on Tunisia at that moment in time was minimal.

Give me a ballpark estimate. How many analysts would've been thinking about Tunisia week to week?

Honestly, within the community, maybe a dozen. At my old shop, the CIA, it was half of one FTE [Full Time Equivalent] for a period of time. Tunisia's not high on the list of US concerns. The issue is that it was the trigger for a greater event, the Arab Spring. When that happens, it's affectionately referred to as, "Cleanup on aisle eight": there's some crisis, and we have to surge a bunch of people to that crisis for some period of time. Organizations try to plan so that they can staff around crises, knowing that we're not going to catch everything because we don't have the resources or personnel or programs.

We don't have global coverage per se. HUMINT [human intelligence: collecting information through individuals on the ground] is slow, meticulous, and specific. If you're going to dedicate human resources to something, it's usually a big something. Case officers go out and find some spies to help us with collection on Country X. It’s a very long and methodical process. We may not have resources in Tunisia at any given time because it just isn't high on our list. And then we get a Black Swan event out of nowhere. The immolation triggers a whole bunch of protests, and then [Hosni] Mubarak [the president of Egypt] falls.

The administration says, "You didn't tell us Mubarak was going to fall." The response is, "We've been telling you for 10 years that Mubarak is going to fall. The Economist has been telling you for 10 years. Everybody on Earth knows that Mubarak can't stay in power based on his power structure. But we can't predict what day it's going to happen." We would love to. But, realistically, we all recognize that Mubarak is very weak and hanging on by a thread, so at the right tipping point, he's going to go. The notion that somehow the community missed it is fictitious. That's generally a policy utterance, you know? "Oh, the community missed it." Well, not really.

You mentioned that this problem, not monitoring important things until you have a cleanup on aisle eight, is a feature of the way these priorities get put together. Are there other blind spots or weaknesses that result from how the intelligence community sets priorities?

There are other weak spots. I think the biggest misconception about the community and the CIA in particular is that it's a big organization. It really isn't. When you think about overstuffed bureaucracies with layers and layers, you're describing other organizations, not the CIA. It is a very small outfit relative to everybody else in the community.

Put some numbers on that?

I can't, actually; that one's classified. I don't even know of a good analogue. I don't know how many analysts the FBI has, but it can't be that many. I'm not even sure what the real number is today.

If the CIA is relatively small compared to what we ask of it, and not everything is a priority, how should the intelligence community prepare for these kinds of Black Swan events? How could it do a better job of prediction and analysis?

I think there's an opportunity to employ a lot of technology for global coverage that we are not yet using, or that I'm not aware of us using. I suspect that places like Polymarket [a prediction market where users can bet on the probability of events] are interesting training grounds for agentic AI, or for an analyst alert. You can broaden your scope of data consumption by going outside of traditional pipelines.

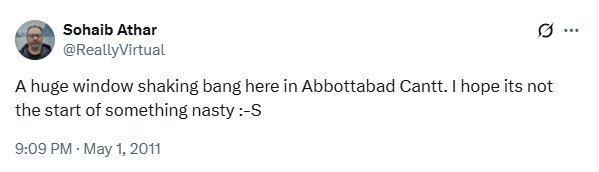

One thing has changed in the most positive way, and that's the utilization of open source [publicly available material]. Using open source was a tough ask 20 years ago. People doubted its value compared to expensive technical collection. They assumed that billions of dollars on a satellite would solve a problem that it could not solve, when in fact, open source was clearly a better avenue into knowledge. The very first public reporting about the bin Laden raid was on Twitter. It was people in Abbottabad who were witnessing this and tweeting live that this was happening.

We've talked about intelligence gathering on this show with Edward Luttwak, a longtime intelligence community observer; with Laura Thomas, who was CIA Chief of Base in Afghanistan; and with Jason Matheny (who I believe you know), former head of IARPA and now head of the RAND Corporation. They’ve offered different diagnoses of intelligence community problems. I want to hear your view.

The diagnosis that Thomas and Luttwak made was that we don't have enough area expertise — enough language speakers, or people who understand the places they're analyzing. Laura Thomas talked about it in Afghanistan; Luttwak described how, in several Central Asian embassies, the State Department and intelligence folks didn't speak the local language, only Russian, and they spoke Russian badly. That’s one diagnosis.

Then there's another diagnosis, which Matheny talked a lot about: he tried to build prediction markets within the intelligence community. He had a successful trial, and then it got shuttered. His idea was, we need to build better systems internally for rewarding predictive accuracy. We don't do that very well: we reward getting into the President's Daily Brief, or other short-term products.

What do you think of those theses?

I tend to agree that we have a talent issue. It’s a confluence of security and secrecy requirements, suitability for the work, and our ability to clear people to work. It's also a reluctance to engage folks that seem dissimilar from us. You want different cognitive perspectives all operating together to try to work through a problem. It's okay to have conflict, disagreement, and heartfelt discussions about whatever you're working on, as long as you manage it. That should be expected.

The problem is if you never get there. If the entire recruitment, selection, and security process weeds out anybody who doesn't look like me, or doesn't have a doctorate, or something like that, we need to address that. I wouldn't be surprised if we could find clever ways to air-gap¹ the most secret work that we're doing from the less secret work, so that those two can come together in some way. I think that's reasonable.

How does the intelligence community assess its predictions in retrospect and do a “hot wash,” an after-action review of predictions?

I had the great fortune of starting the Lessons Learned program at the CIA and running that for some time, and then running the one at the DNI [the Office of the Director of National Intelligence] as well. That was a first attempt to get the agency and the community writ large to buy into the notion. The Army does this after every exercise. They go to Fort Irwin [the National Training Center], run a simulation, sit down, and do a hot wash at the end of the exercise. They wake up and do it all over again. It's not an unusual thing for the services: the Army, the Navy, they all do it. The idea of Lessons Learned was, “Let's get the community to buy into that process.” Part of it was centralized, and part of it was, “Let's just do this in your office. You don't need to involve us. You can just do it on your own.”

But the point is, you build the habit of looking back at your predictions and how you got to them and reassessing?

The forecasts that we do are not just analytic forecasts. They're forecasts of everything. If I've got an operational plan, there are forecasts about, "Is it safe to go here? Am I being followed?" all that kind of stuff. So when you're making those kinds of decisions and predictions, why not? It's universal.

Before you launched the Lessons Learned program, did this stuff not exist at all? Was it just not formalized?

There has been a history program at the agency since the ’50s. They’ve had a staff historian, but the historians' time horizons are different from those of Lessons Learned. The historians would prefer to look at the missile gap [the perceived gap in nuclear missile capability between the US and the Soviet Union] in the ’70s or ’60s. They like to think about that frame of time. What happened yesterday, after we did this thing, is less likely to show up on their radar. That gap is where the Lessons Learned program was trying to focus: somewhere between the old historical records and archives and today's operations and analysis. People had been doing it ad hoc, without the kind of routine the military uses. So this was formalization, but it was also a different capability: our methodology, process, and product were different.

What kinds of lessons were consistently learned in the Lessons Learned program?

There's an argument that the lessons learned are more accurately described as lessons collected or lessons archived, rather than learned.

Because learning institutionally is hard?

Learning institutionally is hard. Not only is it hard to do, but it's also hard to measure and to affect. But, if nothing else, practitioners became more thoughtful about the profession of intelligence. To me, that was really important. The CIA is well represented in fiction, from Archer to Jason Bourne. It's always good for the brand. Even if we look nefarious, it scares our adversaries. But it's super far removed from reality. Reality in intelligence looks about as dull as reality in general. Being a really good financial or business analyst, or doing any of those kinds of tasks, works a certain part of your brain that you can either train and improve, or ignore while hoping for the best.

I don't think any of those are dull, but I take your point about perception vs. reality.

I don't mean to suggest those are dull, but generally speaking, they don't run around killing assassins. It's a lot less of that.

What do American intelligence analysts do if not the fun stuff from the Bourne movies?

They read, they think, they write. They write some more, they edit, they get told their writing sucks. They go back, they start over again. Some manager looks at it and says, "Is this the best you can write?" And they say, “No.” And they hand it back to them, and off they go to write it again. It’s as much of a grind as any other analytic gig. You're reading, thinking, following trends, looking for key variables.

Analysts who are good on their account generally have picked up very specific tips and tricks that they may not even be able to articulate. The best performers in the agency had a very difficult time explaining how it was they went about their analysis, and articulating their expertise. That's not unusual. Experts really aren't very good at articulating why or how they're experts, but we do find that after 10,000-ish cases, they get better, because they're learning what to look for and what not to.

That comes with some penalties. The more hyper-focused you are on topic X, the less likely you are to think that topic Y is going to affect it. And often it's topic Y that comes in orthogonally and makes chaos. “How do you create expert-novice teams?” was a question that we struggled with: finding the right balance between old and new hands, because you wanted the depth of expertise along with the breadth of being a novice. Novices would try anything because nobody told them they couldn't. That's a very valuable thing to learn from. If you're an analyst or an analytic manager, the challenge is how to balance that structure.

You wrote this great ethnographic study of the intelligence community, called Analytic Culture in the US Intelligence Community, back in 2005. It's assigned reading at DARPA, the Defense Advanced Research Projects Agency, among other places. It’s a spinout of the Lessons Learned program. In the book, you focus on how much of this expertise is implicit: not formalized, and pretty hard to formalize.

How are you supposed to formalize mentorship or shadowing in intelligence work, if people don't even have a clear sense themselves of why they're good or not?

You're right, people aren't very good at that. They don't describe it well, or even really understand it very well. What I had hoped for 20 years ago was technology to shadow the analysts. You would have what would today be agentic AI: a digital twin that would consume information and be aligned with your decision criteria as you make decisions. It would be trained on you doing your day job and doing exercises and training. The algorithm would create a fine-tuned model of your decision criteria, and you'd have to go back and adjust it; it's not permanent. Eventually there'll be overfitting and all those kinds of problems.

So today, I would probably be advocating for something like using large language models to help create digital twin-like entities for analysts as they're learning their job. So that when I'm not there — I've been run over by a bus, I've retired, I'm sick — and somebody wants to tap into whatever elements of my decision criteria they're interested in, they should be able to. That's the ideal. There are lots of security issues, and I would fully expect both IT and security to go nuts. I'm sure that it would take years of negotiation. But I don't see why we couldn't get there.

A year ago I talked to Dan Spokojny, who worked at the Department of State, and we discussed how learning happens there. State is also trying to figure out what's going to happen in a country, and their memo system is hopelessly arcane. There's no codification of how information comes in or out. There's not really any system for assessing, “Was this desk chief especially good at predicting?”

I had some of the same questions for him: What are the limits of building information management tools? How much can you improve efficacy by treating it like a science? My sense is, you can definitely be more rigorous about data and trying to learn from it, without saying that digital versions of analysts would be better than human analysts.

I'm not sure that a digital version would be better than our analysts. I don't look at AI as a replacement technology for genuinely complex cognitive tasks. There are issues about hallucinations and overfitting and all of that. But if you understand what the real guardrails are for our use case, it will help you figure out good ways to implement it.

Ideally, we should probably offload as much transactional work as we can into automation and free up more time for thinking. The problem is, it's hard to sell that to anybody. We say, “What if we can cut two hours out of every analyst’s day just by getting rid of the transactional work?” Instead of filling out forms, they could be thinking deeply about this problem, or reading, or learning the language, or be in-country, getting firsthand experience. Lots of things they could be doing instead of filling out forms.

When you go to get your budget and tell them, "We're going to liberate two hours for people to think," you get blank stares. You go to the SSCI, the Senate Select Committee on Intelligence, or the HPSCI, the House Permanent Select Committee on Intelligence. You say, "We're going to free up time for analysts to spend more time thinking." In their heads, they're like, "Oh, great. What do we have to show for that?"

How can we demonstrate that two hours more of thinking is going to change our ability to deal with crises, or make forecasts, or negotiate? How do we know that there's a payoff there? There are ways to measure, there are KPIs, but they're not flashy or sexy. You're not going to roll into DARPA and say, "Hey, give me a gazillion dollars because I've got this great idea to give people time to think." That's not what people want to hear.

In your book, you talk about the cognitive biases that create issues in intelligence, like confirmation bias. Let’s say I'm an intelligence analyst, working on some tasking. How does confirmation bias show up?

It can be subtle and it can be not so subtle. The subtle version is, it’s been a couple of years, I’ve rotated jobs, I come to a new desk, and a new analytic topic. I'm going to read in — read all the stuff that has been produced around that account before I got there. That'll give me a good idea of what the community has been saying. We call that the analytic line. If we deviate a lot from that narrative, we have to have very concrete reasons why we deviate, because that's been a consistent finding across people over time. The subtle problem is, every time I read into that, I'm conditioning myself to think about the problem in the way we have been thinking about the problem. The more I do that, the more I fall into the same traps that everybody else has fallen into. I'm now consuming all of the things they've produced, and that is having an effect on my thinking about the account.

This seems like a tricky problem to solve, because most of the time, I'm guessing you do want to follow the party line. Most of the time getting read in is making you better. The problem is that the intelligence community, fairly or not, is judged on the times that are not like most times.

That's very true. If we shorted the market every day, eventually we'd make a lot of money. Up until that day, we'd lose a lot of money. We could do analysis like that, but the problem is what happens for the other 364 days. You have to have a lot of money to play that game. Or in the case of the agency or the community, you have to have a lot of people and resources. If you have a constrained budget, workforce, and pipeline, between the people who are onboarding and those who are retiring, you don't have the capital to play the Black Swan game. To be more attuned to that will take considerably more resources than the community has, or has ever had. And generally speaking, those resources are driven towards collection platforms, not towards humans.

Tell me about that. My impression is that the trend in the intelligence community has been, “HUMINT is hard and costly. Sometimes China gets all our spies and kills them. Signals intelligence is expensive, but it's relatively risk-free.”

As a result, the trend has been more signals intelligence, more exquisite satellites, fewer people on the ground. Language acquisition is less of a priority. Is that roughly right?

I think you're correct. The problem is that it's a bit of a false choice. The community thinks that if we have signals intelligence, if we can get a conversation between two influential parties within a country, that conversation is going to be more revealing than the human source. But that assumes that the people in that conversation don't just lie to each other all the time.

A great example of that is Saddam Hussein and weapons of mass destruction. Kevin Woods wrote a book called the Iraqi Perspectives Project. Saddam had recorded all of his meetings, and Woods had access to all the recordings from those senior military-Saddam interactions. In every single one, Saddam's generals were lying to Saddam, because they all wanted to live. He would say, "How's our nuclear program?" And the generals would say, "Oh, it's going great, boss." Or "How's our chem-bio program?" "Oh, our chem-bio program's just wonderful. We're doing great work. It'll scare everybody." None of that is factually accurate. It just wasn't true. If all we're doing is listening in on conversations like that, we're going to be just as bamboozled as Saddam was. I fear what happens when we think, "Technical collection gets us truth." Technical collection gets us access. I don’t know if it gets us truth.

The budget impetus [is important]. If I'm going to build a satellite and I can engage subcontractors in 43 states, versus hiring a thousand more analysts, what do you think I'm going to do? I'm a politician. The thousand analysts aren't going to come from my district, so I'm going to go for the satellite MacGuffin, whatever it happens to be.

What characterizes really good analysts at the CIA or in the intelligence community? What do we know about the folks who bat above average?

The people who are really good understand sourcing and how important it is for critical thinking. The education should be focused on helping people recognize and refute bullshit. Step one is the critical thinking necessary to say, "This makes no sense," or "This is just fluff." The people who are professionally trained to be really good at understanding the quality and history of a source, and to understand the source's access to information or lack thereof, are librarians. We should probably steal shamelessly from librarians. Data journalism, same thing. There are lots of parallel professions where we could be learning more to improve our own performance.

The folks that I've seen who crush it, they’re like a dog with a bone. They will not let go. They’ve got a question, they're going to answer the question if it kills them and everybody else around them. It’s a kamikaze thing. Those people, the tenacious ones who care about sources and have critical thinking skills, or at least tools to help them think critically, seem the highest performers to me. As a rule, they all keep score. It’s part of their process.

There's a dynamic that happens in professional fields where, if you're really good at analysis, you get moved into management. I was reading Ghettoside, by Jill Leovy, which talks about detective work in LA and Compton, and it’s dramatic how the detectives who are really good at finding murderers are taken off the streets by their own success — they get promoted into management. Is that something that the intelligence community tries to prevent?

It's a perennial problem that if you succeed wildly, you're going to get promoted. The problem is that the very skills that make you a super analyst may make you a terrible manager. There's no indicator they will transfer. The problem is incentivizing and providing a career path for the people who are super at analytic tasks.

Anywhere in the government, there’s the Senior Executive Service: you go up the General Schedule pay scale from GS-1 to GS-15. After GS-15, you go into the Senior Executive Service. How do we get analysts on a path to make it to the senior level when their day job is analysis? We don't want them managing anything.

Ideally, you would just pay them a lot more money to do that work?

That would be the best-case scenario. The people who have managed that very well, and probably it's a result of budgetary pressure, are the State Department’s INR [the Bureau of Intelligence and Research; the acronym INR reflects its name before it became a bureau]. They have really good analysts.

It's the intelligence branch in the State Department. Their analysts work on an account for years. They might spend their entire career working one account. That's unusual — it's not replicated everywhere in the community. It probably should be. There should be a cadre of senior analysts who work an account their entire career, because they love the account and they love solving those problems.

The State Department rotates the folks who staff embassies every couple of years. One of the reasons for that constant rotation is to avoid a Foreign Service Officer getting attached to the nation he is working in and developing some sort of dual-loyalty problem, or a bias that clouds judgment. Is that why people move accounts at the CIA?

I'm sure that plays in the back of some people's minds. But, in my experience, the notion is, if we treat analysis as its own fundamental skill, you can be an analyst on any account; you just need time. I don't think that's entirely accurate, but that was the running narrative: rotating every 2–3 years was meant to help you broaden your horizons and become a better generalist in the world of analytics.

If you start on an account like Honduras, you work Honduras for a couple of years and go on to the next account. The next account is maybe more important on the international stage, or a little more dangerous, the diplomacy is higher stakes, and so on. Eventually you wind up in management. The path to management is to be a really good generalist. If you want to make Senior Executive Service, most of the promotions are management promotions, not practitioner promotions. The carrot that we've dangled is: “If you want to get promoted, you’ve got to be a generalist, to work a lot of accounts, to demonstrate your chops in a bunch of different places.” That’s great for the career, but not so great for the account.

Will you define accounts more precisely for me? Is an account a country? Can it be a region, a topic?

It can be any of those. It can be a functional area, like weapons of mass destruction, weapons proliferation, or crime and narcotics. It can be a region, like Southeast Asia. It could be a country account, like Tunisia.

Those accounts fit within the various centers and directorates in the organization, based on either a regional view or a functional view. When I retired, we were trying to move away from the directorate style of management, which was organized around career services, and integrate people into centers, which were organized around topics. The notion was that if you get somebody into a center, they can work on a bunch of different accounts, but it's all related topics and you don't lose the expertise as they move up in the system. I left before we saw what the outcome of that was, but I would hope it was positive. So you, Santi, show up and you're like, "Man, I am passionate about Latin America. Give me a Latin America account."

How did you know?

Just a guess. All right, let's start you out with Venezuela. It's a hot topic, they've got a natural resource: let's put you on that desk. Plus, it's enough to keep you busy, because it's a busy account. And then in time, you're like, "Well, the center of gravity for my interests is really Brazil. I want to go work on the Brazil account.” “Well, that's great, but you’ve got to go learn Portuguese.” You go to a language learning rotation, pick up Portuguese, go back to that account. You work on that account for a while.

If we had all of Latin America in one mission center, you could do all of that, without the mission center losing what you know about the account. Because, if I've read into the account, I know that you may have authored X number of papers that I just read, so I can walk down to your office and say, "Hey, this just happened. Did you ever confront this problem? What do you think this means?" The capacity to do that is part of the appeal of putting people together. The organization's knowledge hasn't evaporated, because I know that you're down the hall.

What was the model before that?

If you were in the Directorate of Operations, you were in operations. You may go to Latin America; your next tour will be Eastern Europe, then Africa, then Asia. All over the place. The tribal knowledge that you would get when you co-locate often is lost. You would hope to rotate those people into positions where they're training the next cadre. Sometimes it works, sometimes it doesn't. It's a lot more happenstance than purposeful in that model.

That old model seems more James Bond-y. The character goes more places for the movie at the cost of effectiveness.

A consistent problem is that the effectiveness measures are poorly articulated and poorly understood by both the consumers and the customers. The best consumer of intelligence that I have ever interacted with was Colin Powell. He had a very simple truism: "Tell me what you know, tell me what you don't know, then tell me what you think, so that I can parse out what you're saying and make sense of it.” He was a remarkably savvy consumer of intelligence.

Not all consumers are that savvy. Many of them would benefit from spending a little time learning more about the community, understanding the relationship with their briefers and analysts. The more engaged the policymakers are in learning about intelligence, the more savvy they'll get as consumers. Until then, you're throwing something over the transom and hoping for the best. It's not a great way to operate if you have consumers who want your product.

Who were some relatively poor consumers of intelligence information?

There are so many. Dick Cheney was not a poor consumer of intelligence. He just had an agenda, and he understood the discipline well enough to exercise that agenda. [Donald] Rumsfeld was not good. And [Paul] Wolfowitz was much worse at it than he thought. There were some others in that administration, and I don't mean to pick on them. There were plenty of lousy consumers under Obama and under Clinton. Not a lot of them take enough time to really think about what they're getting.

The biggest problem that I have found with ambassadors, generals, or other consumers is they'll go out into the world, shake hands with their counterpart, and decide based on that interaction that they understand their counterpart better than anybody else does. "I went to lunch with so-and-so, I should know." The problem is that so-and-so is not going to tell you the truth. If so-and-so is going to do something, going to lunch with him probably isn't going to be very revealing. He's probably going to tell you what you want to hear. You'd be surprised how many consumers don't even think about that possibility. It boggles my mind.

It is funny you mention Donald Rumsfeld as a poor consumer of information, because one of his famous truisms was, he wanted you to explain your “known knowns” and your “unknown unknowns.” My first impression would be that he’d be a good consumer.

The problem with the Rumsfelds and the Kissingers is that maybe they are the smartest person in the room, but maybe they should stop believing that for a while. That gets in their way. They just assume from the jump that they're smarter than everybody. Not just everybody individually, but everybody collectively. There's a certain amount of ego that goes along with all of this. When the ego gets sufficiently inflated, you reject information that is contrary to your own values, mental model, and thought processes. You assign outlier status to anything that doesn't conform with the way you think about a problem. That's expertise run amok.

That's where people like Rumsfeld or Kissinger come off the rails. They just assume, "Well, I'm smarter than everybody, so I'll figure it out. You just give me raw data." I have not seen a terribly successful model of that. It's better to walk into a room and assume that you're not remotely the smartest person there. You're doing yourself a cognitive disservice if you think you're cleverer than everybody else. It's a rookie mistake, but you see it over and over, and if it works for you and you keep getting promoted, eventually you start to believe it.

It doesn't seem like a rookie mistake to me. It seems like the mistake of a seasoned professional.

You're right. It is a longevity error.

Tomorrow, the president comes to you and says, "Rob, I don't trust my intelligence community folks anymore. I need you to clean up the place and you've got full rein to make whatever changes you think necessary. You've got political cover from above to fix the intelligence community." What's on your laundry list?

I'd have to understand what the principal's vision was. But, when Denny Blair was the Director of National Intelligence (DNI), he and I had known each other in a previous life. We would interact regularly and discuss the challenges facing the DNI. You are, in theory, the top boss of intelligence. The biggest challenge was that budgetary authority didn't go with the job. The budgets all went out to the agencies. They weren't coordinated, which meant there were no levers for the DNI to pull. The best they could do was make recommendations and hope. They implemented a bunch of committees to corral some kind of decision-making process. That's slow, cumbersome, and difficult, and it's not always effective. I would want to see budgetary authority go to whoever's in charge of the community, or whoever is at a cabinet-level seat.

[They also need] discretion about moving people around. We have this thing called joint duty, which is a requirement for promotion. You have to go spend a couple years outside of whatever organization you're from. We've seen a lot of positives from that. There are ways to do it that are a lot more fluid. As DNI, I should be able to reach out and grab a bunch of people who are experts on Latin America, because there's a cleanup on aisle eight on Venezuela. I grab everybody in the community who's working Venezuela. I don't have to negotiate or barter. I can make it happen. It's not very gee whiz, it's just hands-on stuff.

Our concept of security needs modernization.

What does that mean?

All secrets have a shelf life. There are no secrets that will last for infinity. The more people who are read into a program, the greater the likelihood that there's going to be a leak. But instead of recognizing that as ground truth, we pretend that we can hold onto this stuff for 25 or 50 years. It's a fool's errand. There are secrets that need to be held onto very tightly. But only a few. There are lots that are just, “It's a secret this week, but once this happens, there's nothing secret about it.” We spend an inordinate amount of energy on the "It's not going to be secret next week” problem. There's a part of me that thinks we're wasting energy we could be using in other ways. If there are 10 things we really have to keep secret, let’s do that. Let's put the resources to use on those areas that are critical.

It's an unpopular opinion amongst security professionals. It opens up the possibility of more insider threat, “Who's the next Snowden?” Having been outed by Snowden, I can appreciate that. But we're going to have to be real about resources. At some point, I have to sit down and say, “There's just not enough resources to be hyper-focused on everything. We've got to stick to what really matters.”

[For more on over-classification in American government, I enjoyed this essay from friend-of-the-pod Jon Askonas in The New Atlantis, and The Declassification Engine by Matthew Connelly.]

Any other unpopular changes you’ll make to the intelligence community in the Johnston regime?

I'm going to get in the weeds and I apologize in advance. Sherman Kent is arguably the father of US intelligence analysis. When the OSS [Office of Strategic Services, the forerunner of the CIA] was formed, he was going to be the poobah of analytics. Kent and his colleague, [Willmoore] Kendall, had a very specific disagreement about intelligence’s role in policy. Sherman Kent believed that policymaking should be divorced entirely from analysis. Kendall, on the other hand, thought that intelligence was political and that if it wasn't involved in the political process, it probably wasn't serving any particular administration.2

I would want to tackle the problem of politicization in intelligence. There are some things where you should be piped into policy. But there are some things where policy should not be part of your cognitive process. You need to divorce yourself from policy objectives in order to do analysis. That balance still has room to improve, is what I'm trying to say.

Can you give me a rough sketch of how you'd improve that? My impression of the intelligence community is that at least formally, they say, “We're just telling you information.” A lot of the criticism, especially over the last 8–10 years, has been, "You say that, but in practice, the job is riddled with political ideology.”

I have seen that played out myself, and that was with Iraqi WMD [Weapons of Mass Destruction]. I'm more sympathetic to the analysts in that problem/issue/failure, because, for the most part, there was a very stated political desire to invade Iraq. It was driven by people around Bush, and I think personally that had too much influence on the decisions that were being made in the community at the time.

I don't think you're the only person to think that.

We've seen that problem, that policy can drive you into bad analysis if you let it. I don't mean to pick on analysts, and I apologize to those who might be offended, but we’ve also seen the problem where bad analysis, like 9/11, has a lot more to do with cognitive failure in the community than with policy. People will argue, "That's not true." I lived through the commissions [investigating 9/11]. Trust me: it's true enough.

How do you do something about that? I firmly believe that there has got to be a willingness by policymakers to engage in some kind of bootcamp around intelligence. If you could get policymakers and intelligence practitioners and professionals together for a regular conversation, they could start to have exchanges that were more grounded in the reality of each other's lives. That is a giant disconnect. Since we don't live in policy and they don't live in intelligence, it's always clunky. There must be ways we can come together and work out a better system, a better process for information provision and intake. It shouldn't be this difficult, but it is.

I think that's a great call for us to end on. So policymakers, if you're listening to this conversation, reach out to Dr. Rob Johnston and his colleagues in the intelligence community. We'll put together a little retreat in the woods.

I would hope that exposure would come in handy. I suspect that it is a problem of human distance.

NB: An air gap separates the most secure computer systems from systems with external access.

Johnston: “A guy named Jack Davis, who is a very well-respected analyst in the community, has written about that debate. I recommend Jack Davis's stuff. He's passed away, but he was wicked smart.”